IEEE Transactions on Visualization and Computer Graphics

Real-time Cloth Rendering with Fiber-level Detail

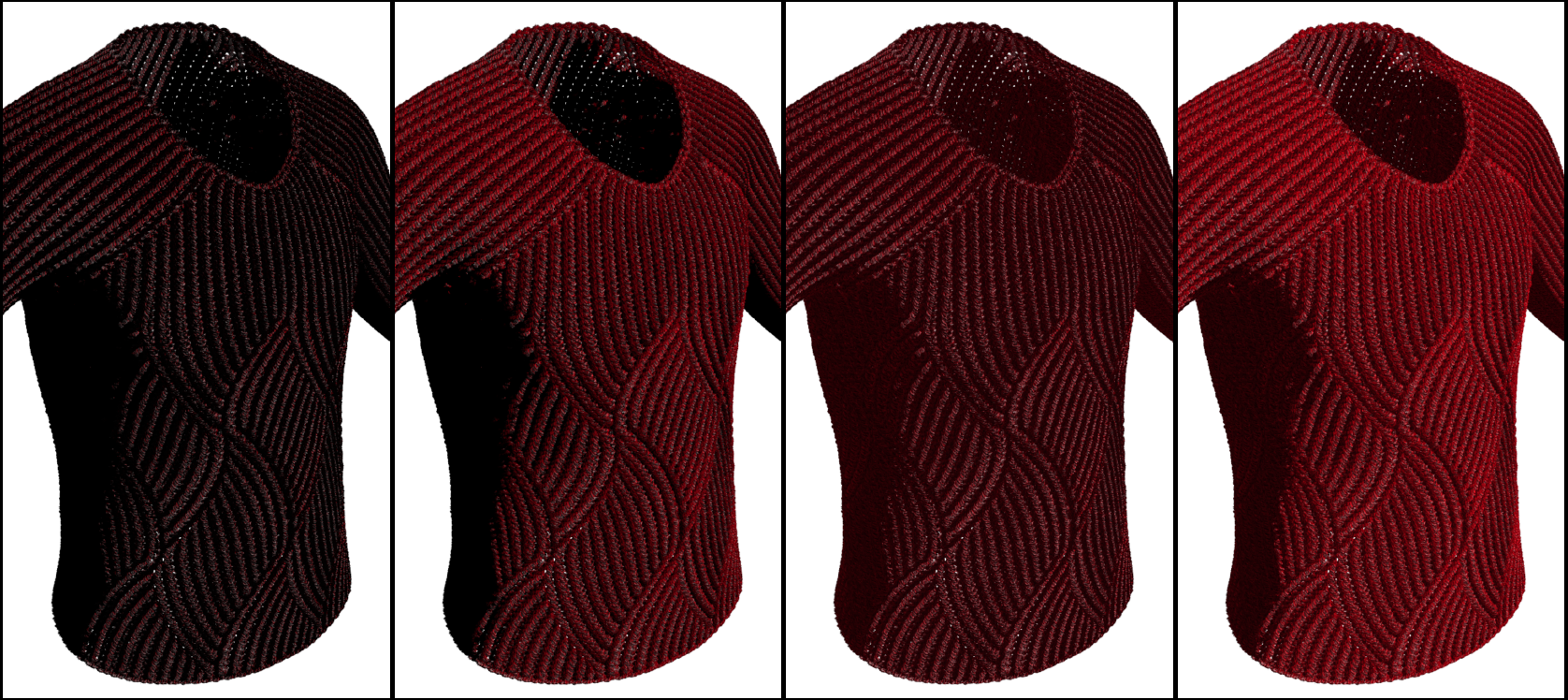

Teaser panels, left to right: Specular Only, Specular+Diffuse, Specular+Ambient, All Components.

Abstract

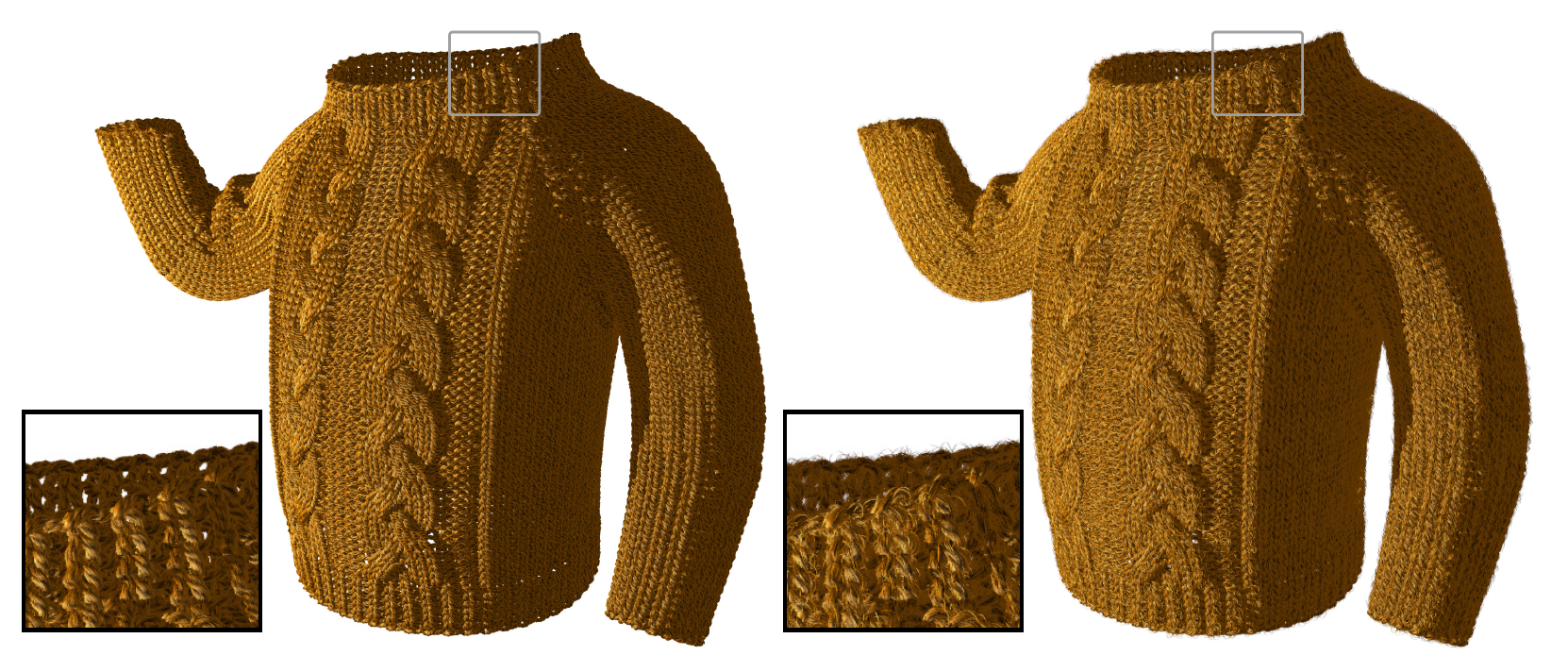

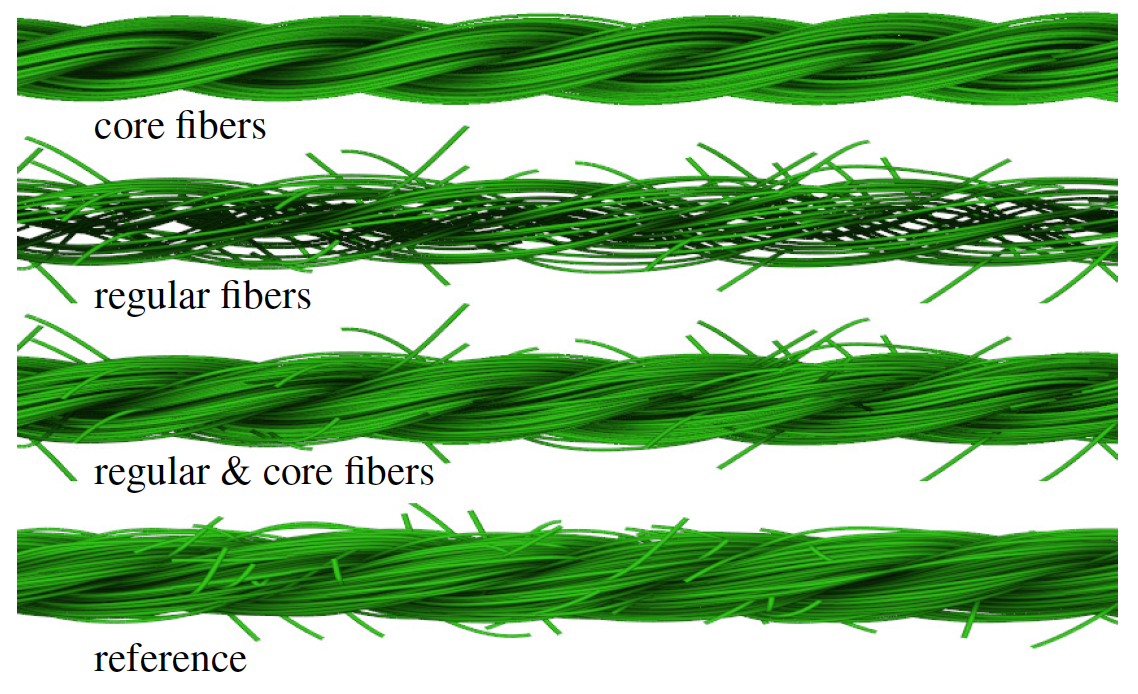

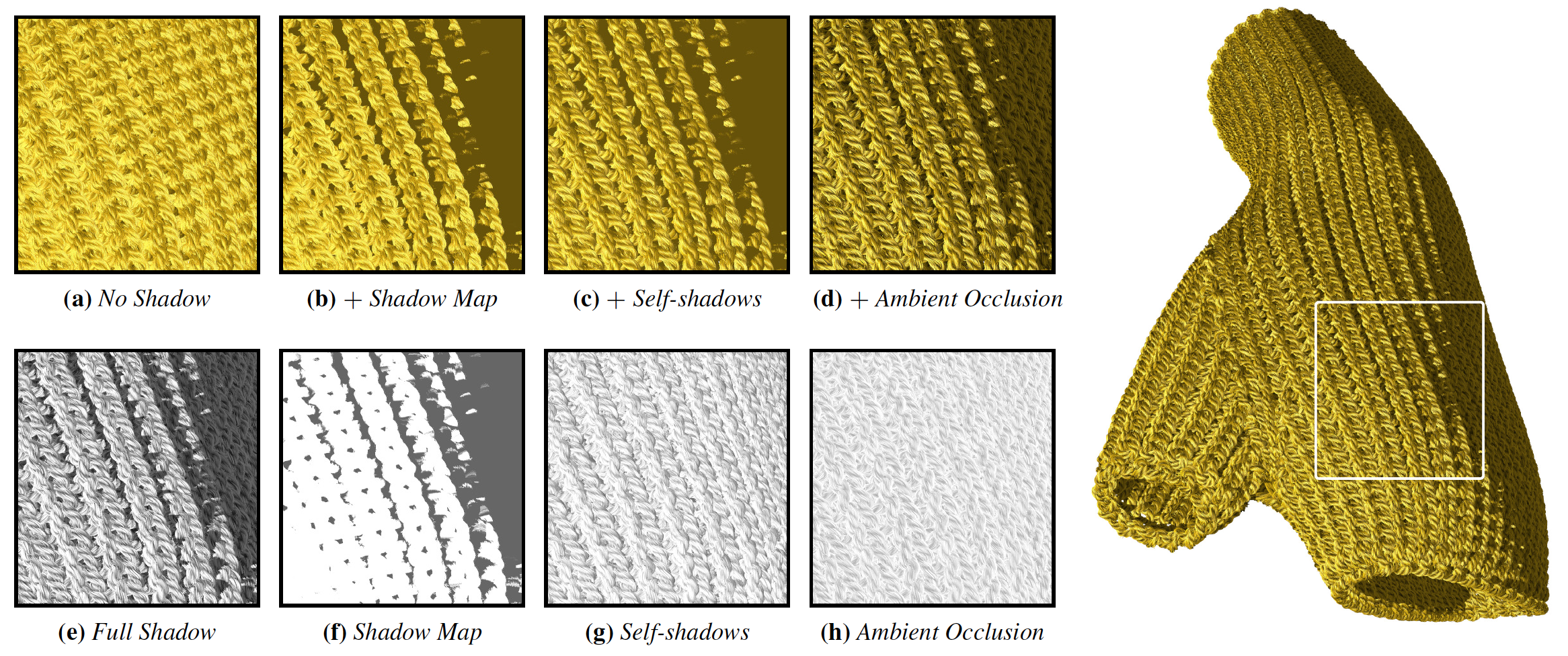

Modeling cloth with fiber-level geometry can produce highly realistic details. However, rendering fiber-level cloth models has high memory and computation costs, even for offline rendering applications. In this paper, we present a real-time fiber-level cloth rendering method for current GPUs. Our method procedurally generates fiber-level geometric details on the fly from yarn-level control points to minimize data transfer to the GPU. We also reduce rasterization operations by collectively representing the fibers near the center of each ply that form the yarn structure. Moreover, we employ a level-of-detail strategy to minimize or completely eliminate the generation of fiber-level geometry that would have little or no impact on the final rendered image. Furthermore, we introduce a simple self-shadow computation method that allows lighting with self-shadows using relatively low-resolution shadow maps. We also provide a simple distance-based ambient occlusion approximation as well as an ambient illumination precomputation approach, both of which account for fiber-level self-occlusion of yarn. Finally, we discuss how to use a physically based shading model with our fiber-level cloth rendering method and how to handle cloth animations with temporal coherence. We demonstrate the effectiveness of our approach by comparing our simplified fiber geometry to procedurally generated references, and we display knitwear containing more than a hundred million individual fiber curves at real-time frame rates with shadows and ambient occlusion.
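
To make the idea of procedural fiber generation with level of detail more concrete, here is a minimal CPU-side sketch in Python. It is not the paper's implementation, which runs on the GPU. It assumes a common double-helix yarn model (plies twist around the yarn centerline, fibers twist around each ply), and every name, parameter, and threshold below (`fiber_offset`, `fibers_for_lod`, the radii, twist rates, and pixel cutoffs) is hypothetical and chosen only for illustration.

```python
import math


def fiber_offset(t, ply_index, fiber_index, *, num_plies=3, fibers_per_ply=8,
                 ply_radius=0.5, fiber_radius=0.2, ply_twist=1.0, fiber_twist=3.0):
    """Return the (x, y) offset of one fiber from the yarn centerline.

    t is a position along the yarn, measured in twist periods
    (an assumed convention for this sketch). The offset lies in the
    plane perpendicular to the yarn at that position.
    """
    # Ply center: a helix around the yarn centerline.
    ply_phase = 2.0 * math.pi * (ply_index / num_plies + ply_twist * t)
    px = ply_radius * math.cos(ply_phase)
    py = ply_radius * math.sin(ply_phase)

    # Fiber: a second, faster helix around the ply center.
    fiber_phase = 2.0 * math.pi * (fiber_index / fibers_per_ply + fiber_twist * t)
    fx = fiber_radius * math.cos(fiber_phase)
    fy = fiber_radius * math.sin(fiber_phase)

    return (px + fx, py + fy)


def fibers_for_lod(projected_width_px, max_fibers=8, full_detail_px=32.0, min_px=2.0):
    """Choose how many fibers per ply to generate from the yarn's on-screen width.

    The pixel thresholds are assumptions for illustration, not values
    taken from the paper.
    """
    if projected_width_px <= min_px:
        return 0  # Yarn is too small on screen for fibers to be visible.
    if projected_width_px >= full_detail_px:
        return max_fibers
    # Linearly ramp the fiber count between the two thresholds.
    frac = (projected_width_px - min_px) / (full_detail_px - min_px)
    return max(1, round(frac * max_fibers))
```

Because each fiber position is a pure function of the yarn parameter and two indices, only the yarn-level control points need to be stored and transferred; the fiber geometry can be regenerated every frame, and the level-of-detail function decides how much of it is worth generating at all.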

Paper [Preprint] (PDF, 26.1 MB)

Data [Yarn-level Cloth Models]

Citation [Bibtex]

Demo