ACM Transactions on Graphics (Proceedings of SIGGRAPH 2025)

Real-Time Knit Deformation and Rendering

Tao Huang*,

Haoyang Shi*,

Mengdi Wang*,

Yuxing Qiu,

Yin Yang,

Kui Wu

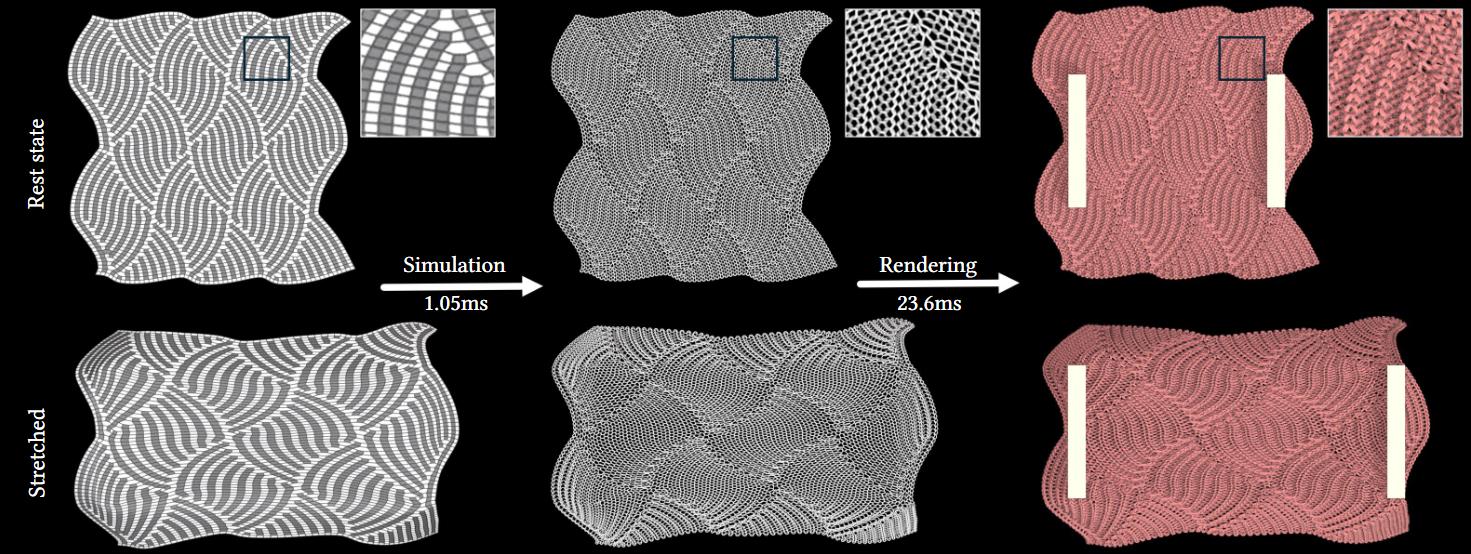

Given an animated stitch mesh with a non-periodic Flame pattern (left), our system produces faithful yarn geometry (middle) based on the underlying mesh deformation, and high-quality knit rendering with fiber-level details under environmental lighting (right). Simulation and rendering take 1.05 ms and 23.6 ms, respectively, on an NVIDIA RTX 3090.

Abstract

A knit structure consists of interlocked yarns, with each yarn comprising multiple plies, each made of tens to hundreds of twisted fibers. This intricate geometry and the large number of geometric primitives present substantial challenges for achieving high-fidelity simulation and rendering in real-time applications. In this work, we introduce the first real-time framework that takes an animated stitch mesh as input and enhances it with yarn-level simulation and fiber-level rendering. Our approach relies on a knot-based representation to model interlocked yarn contacts. The knot positions are interpolated from the underlying mesh, and the associated yarn control points are optimized using a physically inspired energy formulation, which is solved through a GPU-based Gauss-Newton scheme for real-time performance. The optimized control points are sent to the GPU rasterization pipeline and rendered as yarns with fiber-level details. For real-time rendering, we introduce several decomposition strategies that enable realistic lighting effects on complex knit structures, even under environmental lighting, while maintaining computational and memory efficiency. Our simulation faithfully reproduces yarn-level structures under deformations such as stretching and shearing, capturing interlocked yarn behaviors. The rendering pipeline achieves near-ground-truth visual quality while being 120,000x faster than a path-traced reference with fiber-level geometry. The whole system runs in real time and has been evaluated in various application scenarios, including knit simulation for small patches and full garments, and yarn-level relaxation in the design pipeline.
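To make the two simulation steps in the abstract concrete, here is a minimal 2D sketch in Python. It is not the paper's implementation: the bilinear interpolation of knots from a quad face, the length-preserving toy energy, and the names `bilerp` and `gauss_newton_point` are all assumptions made for illustration, and the code runs on the CPU rather than the GPU. It places two knots on a stretched quad, then moves one yarn control point with Gauss-Newton iterations until its distances to both knots match a prescribed rest length.

```python
import math

def bilerp(quad, u, v):
    """Bilinearly interpolate a 2D point in a quad [p00, p10, p11, p01].
    Assumption: the page does not say how knots are interpolated."""
    weights = ((1 - u) * (1 - v), u * (1 - v), u * v, (1 - u) * v)
    return tuple(sum(w * p[c] for w, p in zip(weights, quad)) for c in range(2))

def gauss_newton_point(x, knots, rest_lengths, iters=10):
    """Minimize sum_i (|x - k_i| - L_i)^2 over one 2D control point x.
    Toy energy (hypothetical), standing in for the paper's formulation."""
    for _ in range(iters):
        a11 = a12 = a22 = 0.0   # entries of J^T J (symmetric 2x2)
        g1 = g2 = 0.0           # entries of J^T r
        for (kx, ky), rest in zip(knots, rest_lengths):
            dx, dy = x[0] - kx, x[1] - ky
            d = max(math.hypot(dx, dy), 1e-12)  # avoid division by zero
            r = d - rest                        # residual for this knot
            jx, jy = dx / d, dy / d             # Jacobian row: d(r)/d(x)
            a11 += jx * jx
            a12 += jx * jy
            a22 += jy * jy
            g1 += jx * r
            g2 += jy * r
        det = a11 * a22 - a12 * a12
        if abs(det) < 1e-12:    # degenerate normal equations: stop
            break
        # Solve (J^T J) s = -(J^T r) with the closed-form 2x2 inverse.
        sx = (-g1 * a22 + g2 * a12) / det
        sy = (-g2 * a11 + g1 * a12) / det
        x = (x[0] + sx, x[1] + sy)
    return x

# A unit quad stretched to width 2 (hypothetical deformed mesh face).
quad = [(0.0, 0.0), (2.0, 0.0), (2.0, 1.0), (0.0, 1.0)]
knots = [bilerp(quad, 0.0, 0.0), bilerp(quad, 1.0, 0.0)]
start = bilerp(quad, 0.5, 0.5)          # initial guess at the face center
rest = [math.sqrt(2.0), math.sqrt(2.0)]
control_point = gauss_newton_point(start, knots, rest)
print(control_point)
```

In this toy setup the control point starts at the face center and settles where both yarn segments reach their rest length. A real system would optimize many control points at once and solve the resulting sparse system in parallel.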

Paper [Preprint]

Video

Results

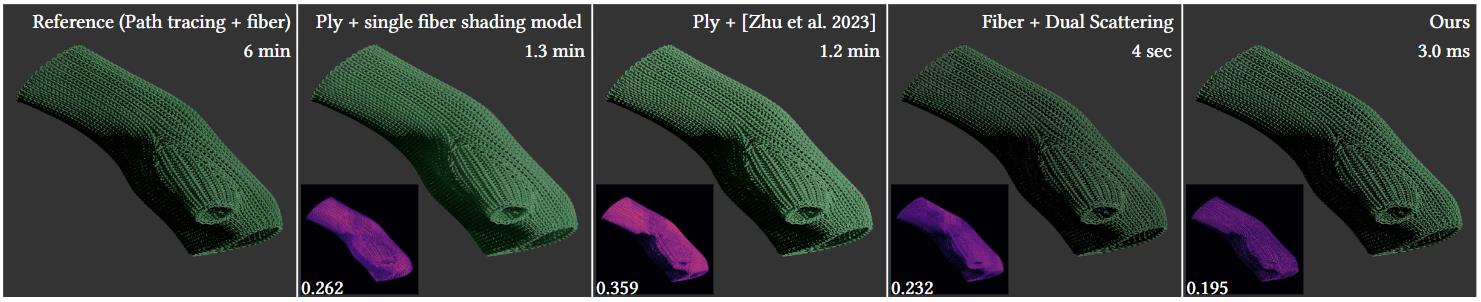

Comparison to previous rendering solutions. FLIP error maps are displayed at the bottom-left corner. Our method is at least three orders of magnitude faster and, with the lowest FLIP error, produces results nearly identical to the ground truth rendered with fiber-level geometry and path tracing.

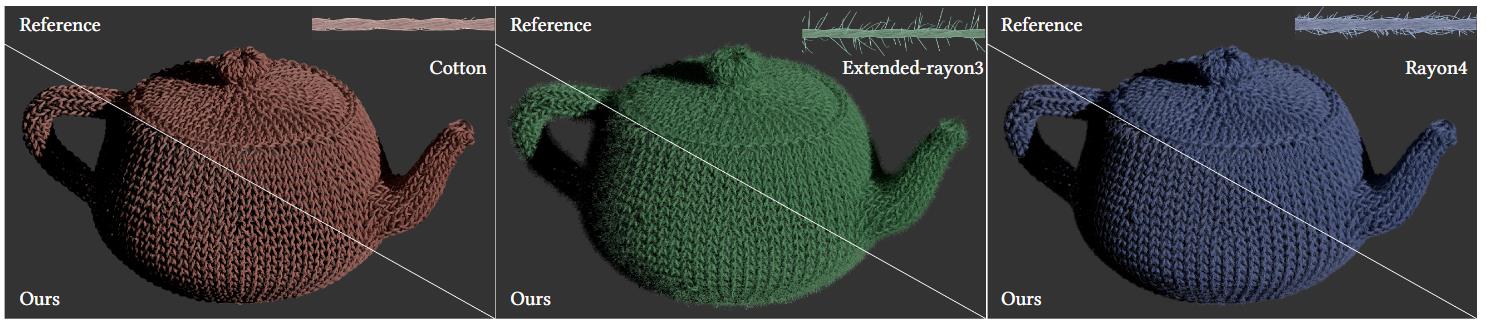

Knit teapot with three distinct flyaway configurations: cotton, extended-rayon3 (flyaway length is tripled for a fuzzier appearance), and rayon4. The bottom left and top right of each image compare our method with the reference. These models comprise 51M, 55M, and 61M fiber segments, respectively. The reference takes 9, 8.3, and 7 min, while ours needs only 5.1, 8.1, and 7.3 ms.
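The speedups implied by these timings can be worked out directly. The short Python sketch below converts the reference times from minutes to milliseconds and divides by our render times. It assumes the timings are listed in the same order as the three configurations; the helper name `speedup` is hypothetical.

```python
def speedup(reference_minutes, ours_ms):
    """Ratio of reference render time to ours, both expressed in milliseconds."""
    return reference_minutes * 60_000.0 / ours_ms

# (reference time in minutes, our time in milliseconds), as quoted in the caption.
# Assumption: timings follow the order cotton, extended-rayon3, rayon4.
timings = {
    "cotton": (9.0, 5.1),
    "extended-rayon3": (8.3, 8.1),
    "rayon4": (7.0, 7.3),
}

for name, (ref_min, ours_ms) in timings.items():
    print(f"{name}: about {speedup(ref_min, ours_ms):,.0f}x faster")
```

Under that ordering assumption, the three teapot renders come out between roughly 57,000x and 106,000x faster than the reference.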

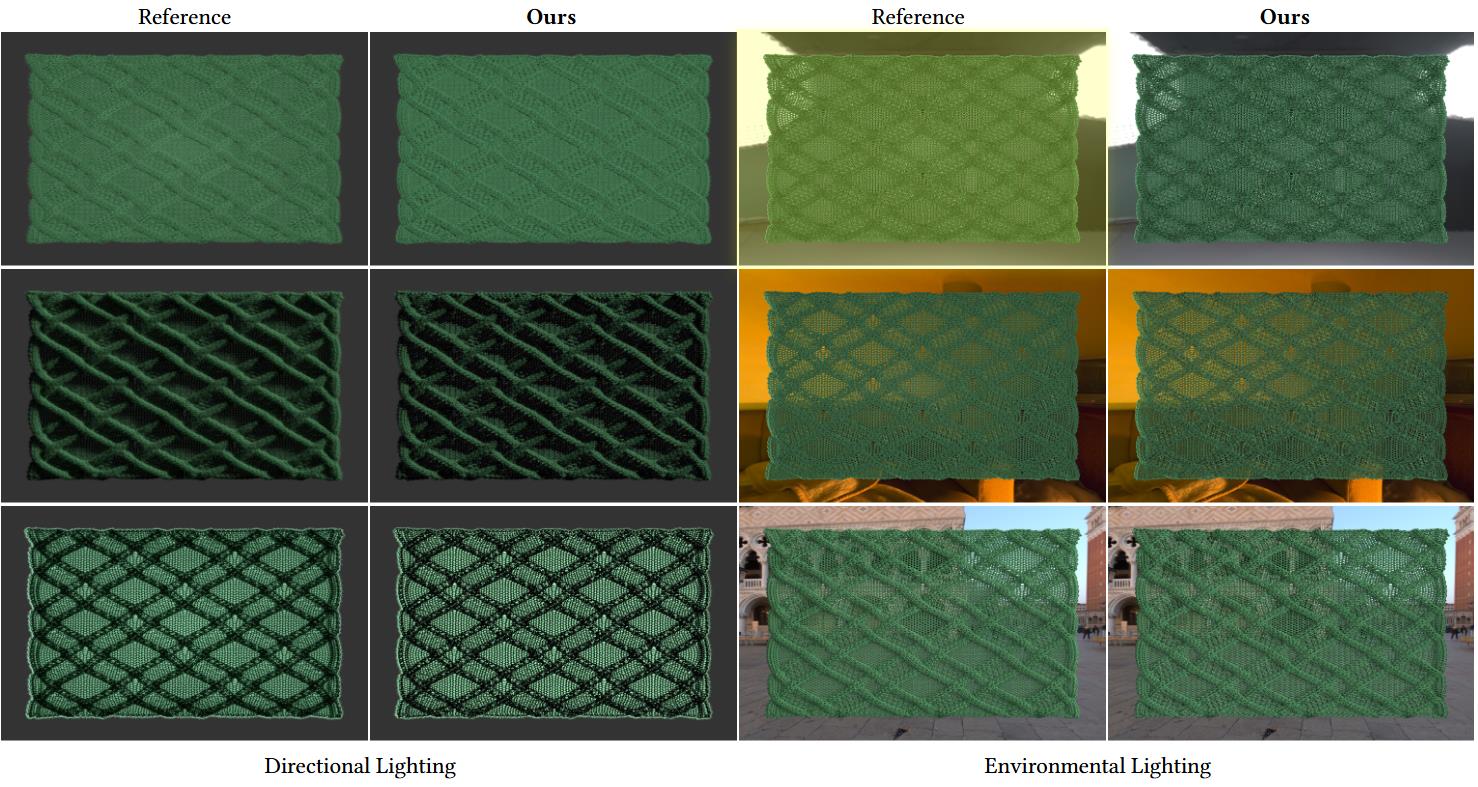

Our approach produces rendered results of equal quality to the reference for view and light direction angles of 15°, 75°, and 180° (left column), and under different environmental lighting (right column). The reference employs full fiber geometry with 118M segments and takes around 15 and 28 min for directional and environment lights, respectively, while ours uses only 100K segments and takes 9.4 and 20.6 ms.

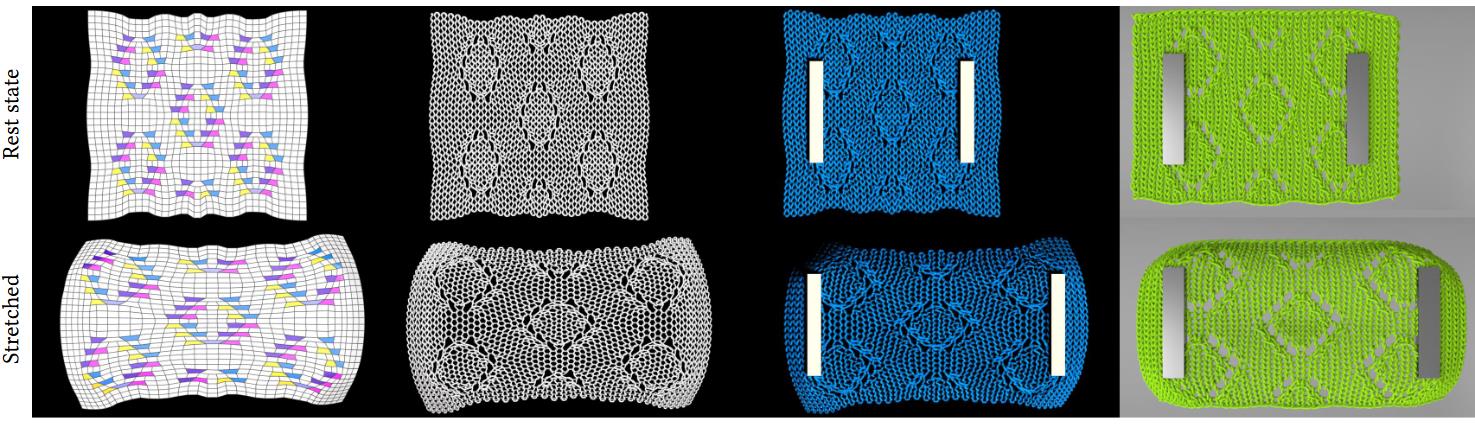

From left to right: stitch mesh, yarn geometry after our knot-based optimization, our final rendering result, and the full yarn-level simulation result from Yuan et al. [2024]. Our simulation takes only 1 ms per frame, while full yarn-level simulation and volumetric homogenization [Yuan et al. 2024] take 7680 ms and 96 ms per time step, respectively.