Computer Graphics Forum (Proceedings of Eurographics Symposium on Rendering 2025)

Real-time Level-of-detail Strand-based Rendering

Tao Huang, Yang Zhou, Daqi Lin, Junqiu Zhu, Ling-Qi Yan, Kui Wu

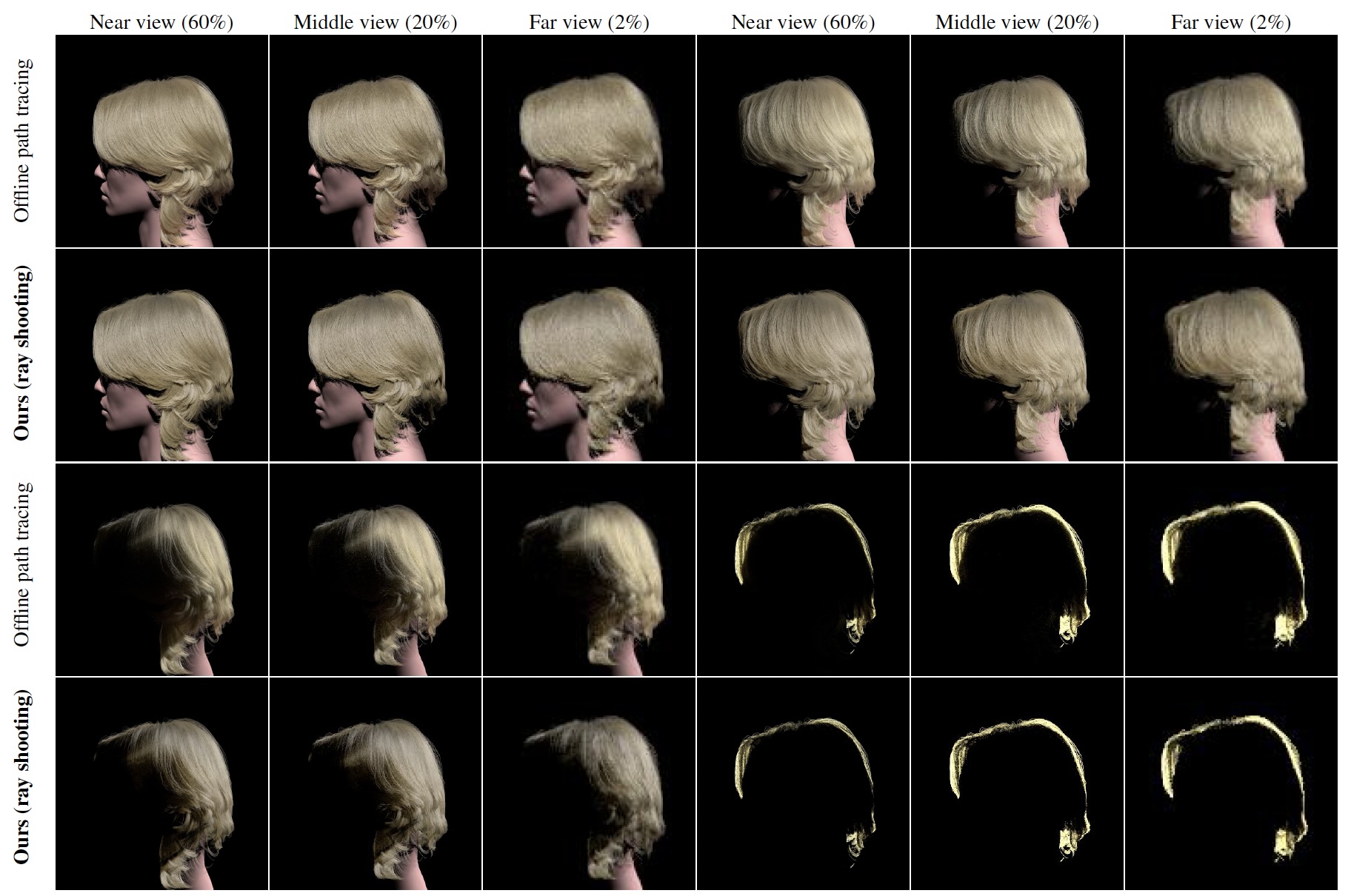

Our approach closely matches offline path tracing when the angle between the view and light directions is 0°, 45°, 90°, or 180°. The offline path tracer uses the full hair geometry with 1050K segments, whereas ours uses 1010K, 857K, and 418K segments for the near, middle, and far views, respectively.

Abstract

We present a real-time strand-based rendering framework that ensures seamless transitions between levels of detail (LoD) while maintaining a consistent appearance. We first introduce an aggregated BCSDF model that accurately captures both single and multiple scattering within clusters of hairs and fibers. Building on this model, we introduce an LoD framework for hair rendering that dynamically, adaptively, and independently replaces clusters of individual hairs with thick strands based on their projected screen widths. In tests on diverse hairstyles with various hair colors and animations, as well as on knitted patches, our framework closely reproduces the appearance of the full geometry with multiple scattering at various viewing distances while achieving up to a 13× speedup.
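To make the screen-width criterion concrete, the following is a minimal sketch of per-cluster LoD selection. It assumes a pinhole perspective camera, a hypothetical list of world-space strand widths per LoD level (level 0 being a single hair, widths increasing with level), and a hypothetical 1-pixel threshold; the names `Camera`, `projected_width_px`, and `select_lod` are illustrative and are not taken from the paper, whose actual selection rule and thresholds may differ.

```python
import math
from dataclasses import dataclass


@dataclass
class Camera:
    fov_y: float       # vertical field of view in radians (assumed pinhole camera)
    image_height: int  # viewport height in pixels


def projected_width_px(world_width: float, depth: float, cam: Camera) -> float:
    """Approximate on-screen width in pixels of a strand with the given
    world-space width, located at positive view-space depth `depth`."""
    if depth <= 0.0:
        raise ValueError("depth must be positive (point in front of the camera)")
    # Pixels covered by one world unit at this depth under perspective projection.
    pixels_per_unit = cam.image_height / (2.0 * depth * math.tan(cam.fov_y / 2.0))
    return world_width * pixels_per_unit


def select_lod(level_widths: list[float], depth: float, cam: Camera,
               max_width_px: float = 1.0) -> int:
    """Return the coarsest LoD level whose (thick) strand still projects to at
    most `max_width_px` pixels. Hypothetical rule: level_widths[0] is a single
    hair and widths are assumed to grow monotonically with the level."""
    chosen = 0  # fall back to individual hairs if even level 0 is too wide
    for level, width in enumerate(level_widths):
        if projected_width_px(width, depth, cam) <= max_width_px:
            chosen = level
        else:
            break  # coarser levels are wider still, so stop here
    return chosen


if __name__ == "__main__":
    cam = Camera(fov_y=math.radians(45.0), image_height=1080)
    # Hypothetical strand widths in meters for LoD levels 0..3.
    widths = [0.00008, 0.0003, 0.0012, 0.005]
    for depth in (0.05, 0.5, 2.0, 10.0):
        print(f"depth {depth:5.2f} m -> LoD level {select_lod(widths, depth, cam)}")
```

Because each cluster is evaluated with its own depth, clusters can switch levels independently of one another. This sketch only picks a discrete level; it does not address how appearance is kept consistent across a switch, which the abstract attributes to the aggregated BCSDF.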

