ACM Transactions on Graphics (Proceedings of SIGGRAPH 2024)

Real-time Physically Guided Hair Interpolation

Jerry Hsu, Tongtong Wang, Zherong Pan, Xifeng Gao, Cem Yuksel, Kui Wu

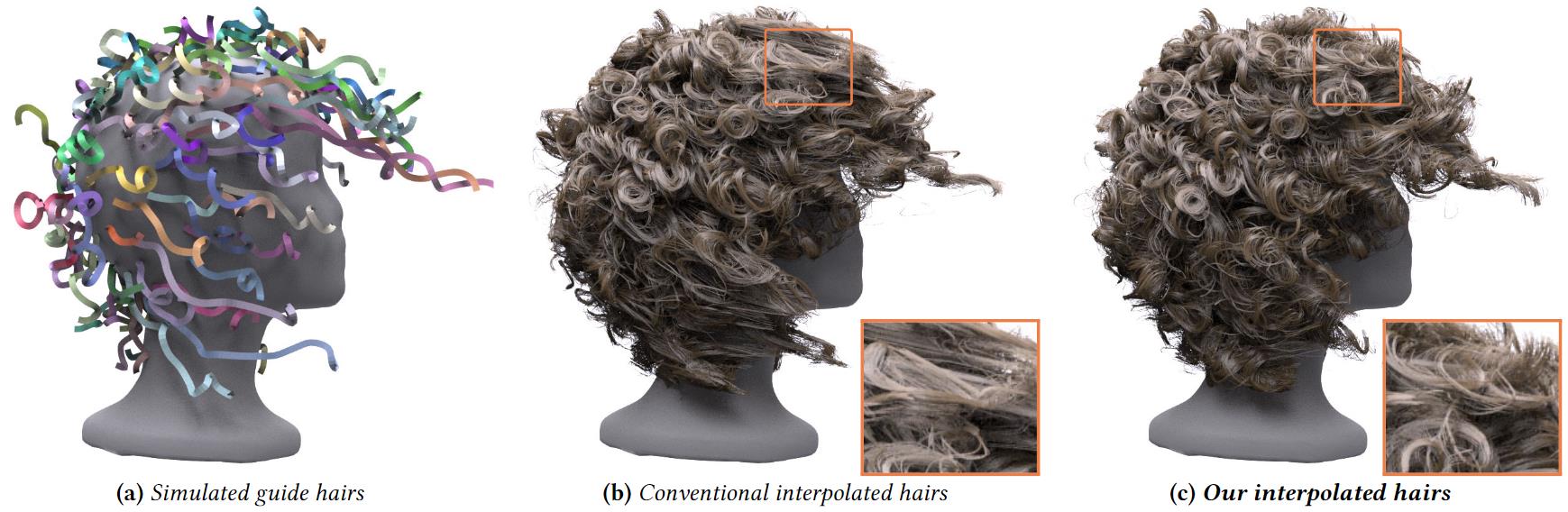

Rendered hairs interpolated from the given guide hairs. (a) The hair model with 128 guide hairs; (b) 106K rendered hairs (1.7M vertices) interpolated from the guide hairs using Linear Hair Skinning (LHS), taking 0.28 ms per frame; (c) rendered hairs interpolated using our method, taking 0.34 ms per frame. This model is especially challenging for interpolation because almost no two guide hairs have the same shape.
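The two timings in this caption give the relative extra cost of our method over LHS for this model:

$$\frac{0.34\,\text{ms} - 0.28\,\text{ms}}{0.28\,\text{ms}} = \frac{0.06}{0.28} \approx 0.21$$

That is roughly 21% extra time per frame, consistent with the approximately 20% overhead stated in the abstract.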

Abstract

Strand-based hair simulations have recently become increasingly popular for a range of real-time applications. However, accurately simulating every hair strand remains challenging. A common technique is to simulate a subset of guide hairs that captures the overall behavior of the hairstyle and then enrich the details by interpolating the remaining hairs with linear skinning. Hair interpolation enables fast real-time simulation but frequently introduces artifacts at runtime. Because the skinning weights are often precomputed, large differences between the initial and deformed shapes of the hair can cause severe deviations in fine hair geometry: straight hairs may become kinked, and curly hairs may turn into zigzags.
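To make this failure mode concrete, the sketch below blends guide positions with weights fixed in advance in the simplest possible way, assuming straight 2D guides and translation-free per-vertex averaging. This is a simplification for illustration, not the LHS formulation used in the paper, and `make_strand`, `blend_positions`, and `strand_length` are hypothetical helpers. When two guides deform so that they diverge by 120 degrees, the 50/50 blend is still straight but has lost half its length, one example of how weights chosen for one shape stop fitting the deformed shape.

```python
import numpy as np

# Hypothetical helpers: a translation-free simplification of linear
# position blending, not the exact LHS formulation from the paper.

def make_strand(angle, n_segments=10, seg_len=1.0):
    """Straight 2D strand starting at the origin and pointing along `angle` (radians)."""
    t = np.arange(n_segments + 1) * seg_len
    return np.stack([t * np.cos(angle), t * np.sin(angle)], axis=1)

def blend_positions(guides, weights):
    """Rendered vertex j = sum_g w_g * guide_g[j], with weights fixed in advance."""
    guides = np.asarray(guides)                  # shape (G, N, 2)
    w = np.asarray(weights)[:, None, None]       # broadcast over vertices and coordinates
    return (w * guides).sum(axis=0)

def strand_length(p):
    """Sum of segment lengths of a polyline."""
    return np.linalg.norm(np.diff(p, axis=0), axis=1).sum()

# Two deformed guides that now diverge by 120 degrees.
g1 = make_strand(np.deg2rad(60.0))
g2 = make_strand(np.deg2rad(-60.0))
rendered = blend_positions([g1, g2], [0.5, 0.5])
print(strand_length(g1), strand_length(rendered))  # about 10.0 and 5.0
```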

This work introduces a novel physically driven hair interpolation scheme that uses existing simulated guide hair data. Instead of operating directly on positions, we interpolate the internal forces from the guide hairs and then efficiently reconstruct the rendered hairs based on their material model. We formulate this as a constraint satisfaction problem and present an efficient solution for it. Further practical considerations, namely penetration avoidance and drift correction, are handled with regularization terms. We have tested various hairstyles to show that our approach generates visually plausible rendered hairs from only a few guide hairs with minimal computational overhead: only about 20% more than conventional linear hair interpolation. This efficiency underscores the practical viability of our method for real-time applications.
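The paper interpolates internal forces and then solves for positions; that solver is not reproduced here. As a simplified stand-in, the sketch below works on planar strands and interpolates an intrinsic quantity instead of positions: the per-vertex turning angle (a discrete curvature, to which the bending moment of a linear elastic rod is proportional). It then rebuilds the strand from its root using the rest segment length. All function names are hypothetical, and blending root headings by a weighted average assumes the guides' root headings are close enough that angle wrap-around does not matter.

```python
import numpy as np

# Hypothetical toy: interpolate intrinsic bending state (turning angles)
# instead of positions, then rebuild each strand from its root.

def turning_angles(strand):
    """Root heading and per-vertex turning angles of a 2D polyline."""
    d = np.diff(strand, axis=0)
    headings = np.arctan2(d[:, 1], d[:, 0])
    # Wrap heading differences into (-pi, pi] so curls do not jump by 2*pi.
    turns = np.angle(np.exp(1j * np.diff(headings)))
    return headings[0], turns

def reconstruct(root, root_heading, turns, seg_len):
    """Chain segments of fixed rest length, turning by `turns` at each interior vertex."""
    headings = root_heading + np.concatenate([[0.0], np.cumsum(turns)])
    steps = seg_len * np.stack([np.cos(headings), np.sin(headings)], axis=1)
    return np.vstack([root, root + np.cumsum(steps, axis=0)])

def interpolate_intrinsic(guides, weights, root, seg_len):
    """Blend root headings and turning angles with fixed per-guide weights."""
    pairs = [turning_angles(g) for g in guides]
    heading = sum(w * h for w, (h, _) in zip(weights, pairs))
    turns = sum(w * t for w, (_, t) in zip(weights, pairs))
    return reconstruct(np.asarray(root, dtype=float), heading, turns, seg_len)

def make_curl(heading, turn, n_segments=10, seg_len=1.0):
    """Guide strand with constant curvature, rooted at the origin."""
    return reconstruct(np.zeros(2), heading, np.full(n_segments - 1, turn), seg_len)

g1 = make_curl(np.deg2rad(60.0), 0.4)
g2 = make_curl(np.deg2rad(-60.0), 0.2)
rendered = interpolate_intrinsic([g1, g2], [0.5, 0.5], root=[0.0, 0.0], seg_len=1.0)
print(np.linalg.norm(np.diff(rendered, axis=0), axis=1))  # every segment keeps length 1
print(turning_angles(rendered)[1])                        # constant curl of about 0.3
```

Because the strand is rebuilt from lengths and angles rather than from averaged positions, its segment lengths are preserved by construction and its curl is a blend of the guides' curls, whereas averaging the same two guides' positions would shorten the strand.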


Results

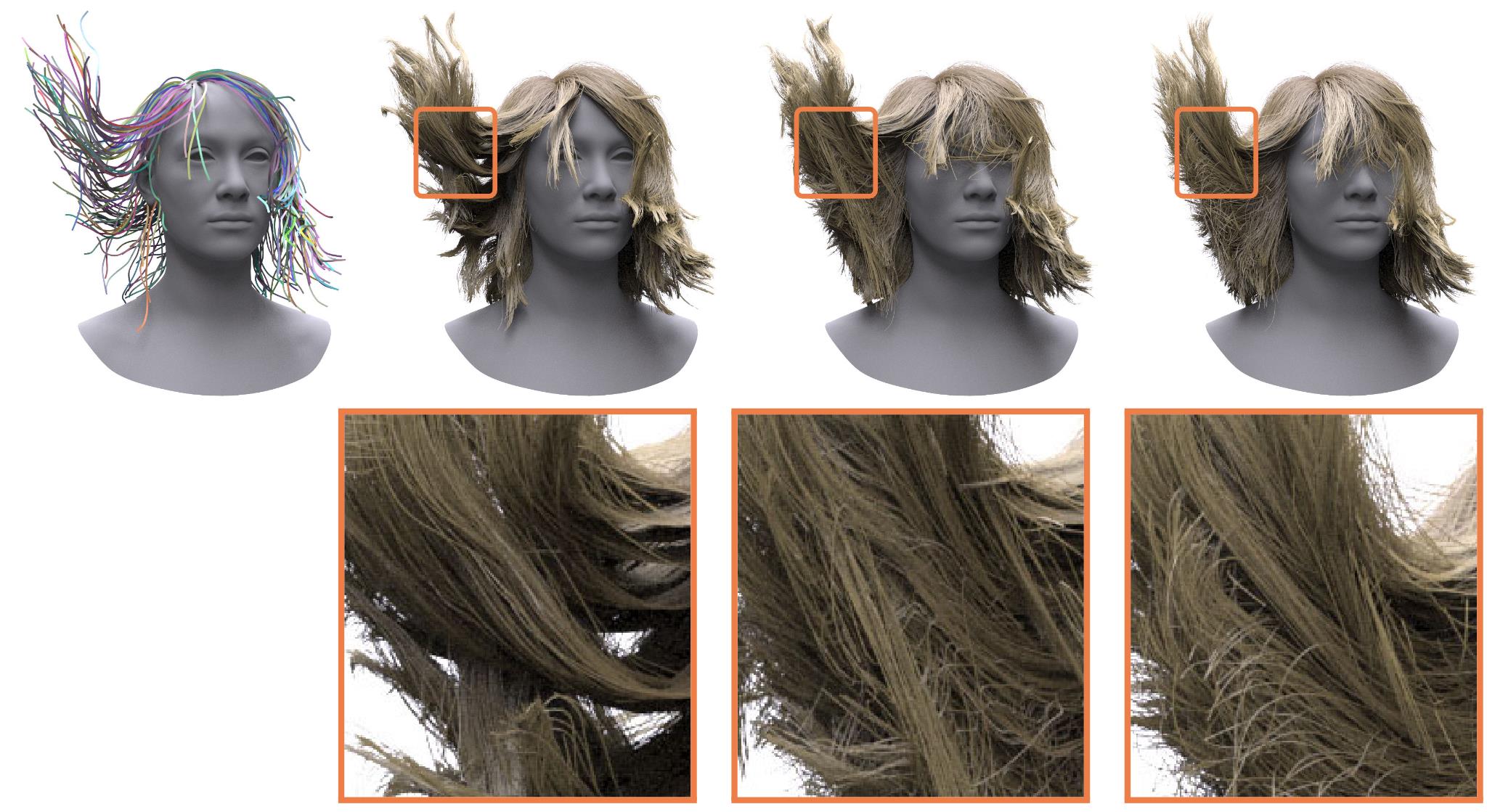

Even with straight hairstyles, using either one guide (LHS single) or three guides (LHS) per rendered hair introduces unnatural clumping. Although our method relies on the same LHS scheme to interpolate forces, it effectively removes these geometric artifacts.

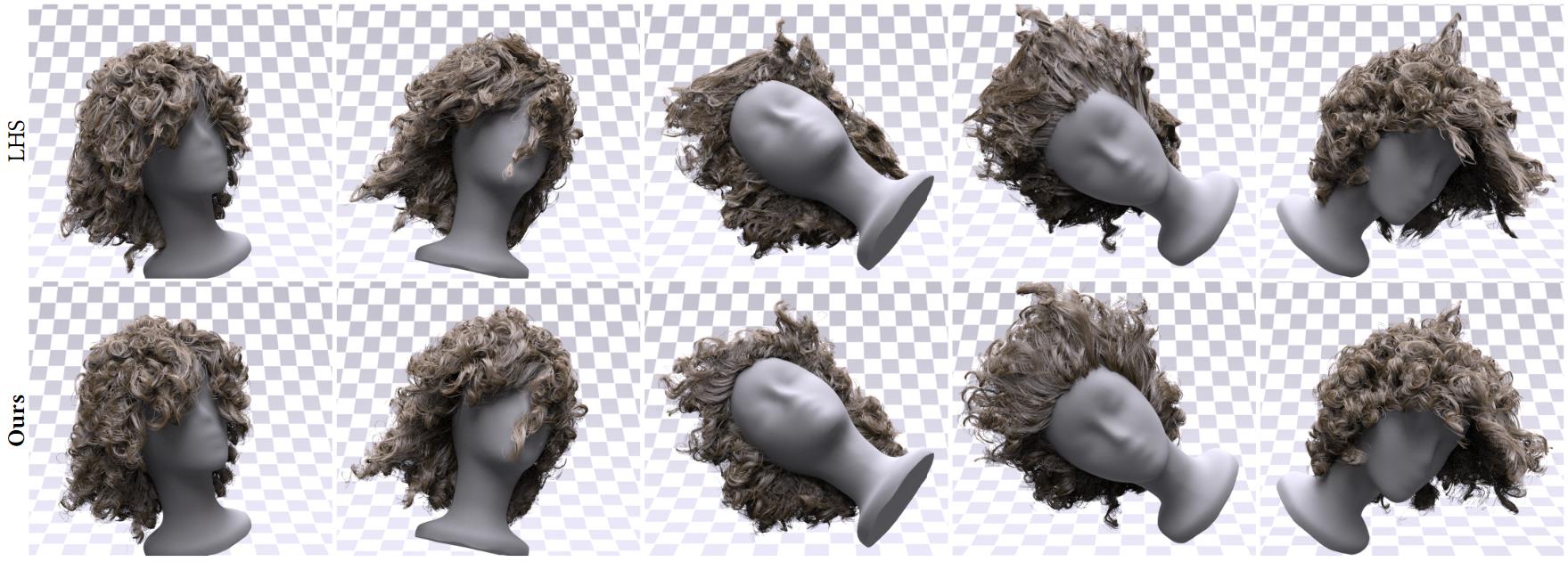

Large separations between guide hairs can amplify errors in the LHS weights. Although we do not use any dynamic guide or weight selection, our method remains robust to geometric artifacts.

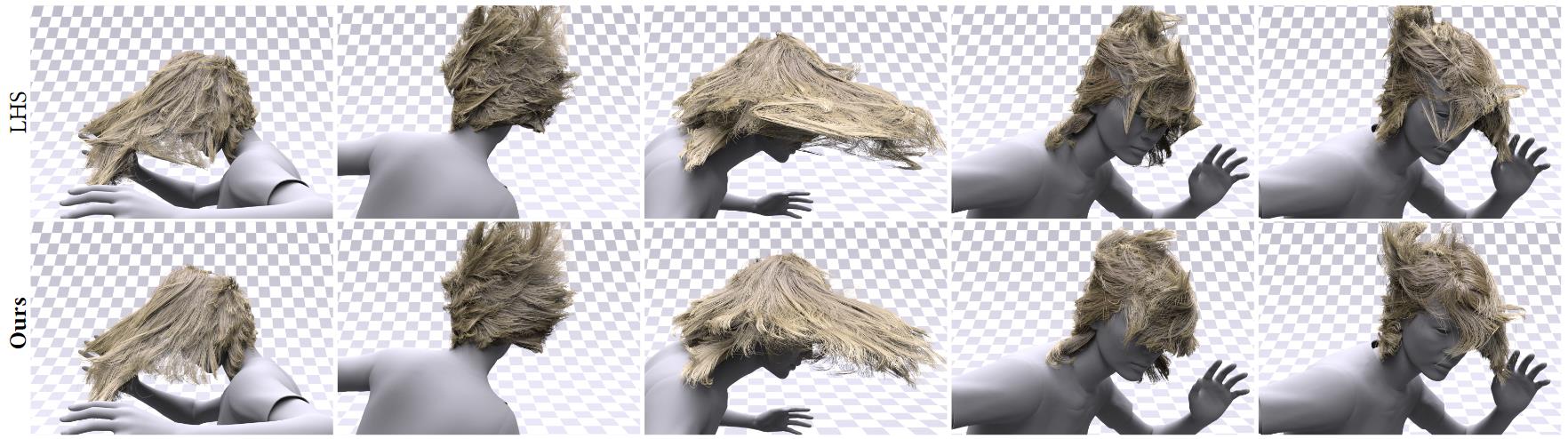

Despite using only 128 guide hairs, our interpolation remains robust under the large perturbations common in motion capture data.

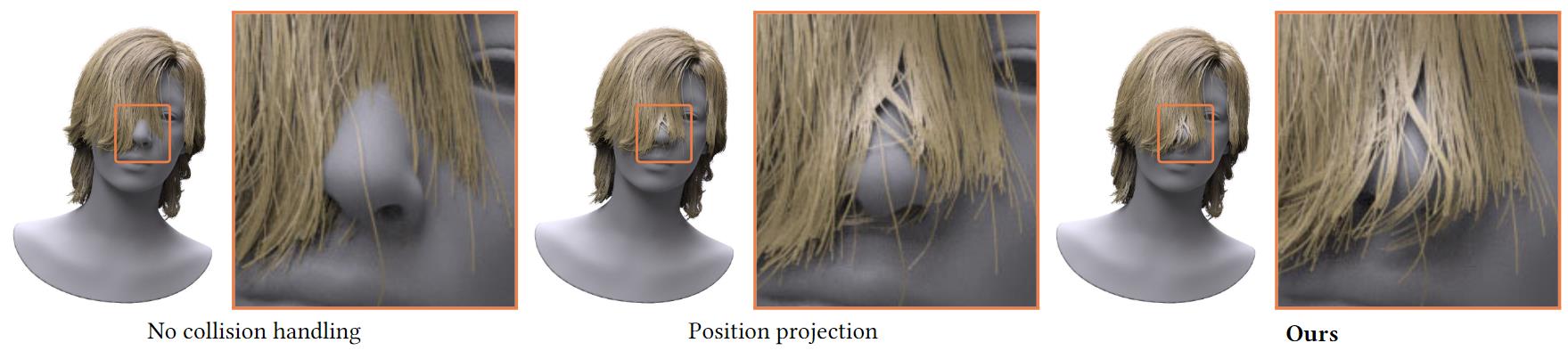

Directly projecting positions can place consecutive vertices on alternating sides of a protrusion. By taking bending energy into account, our signed distance field (SDF) penalty energy avoids this issue and produces strands that favor a consistent projection direction.
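The exact penalty energy and solver used in the paper are not reproduced here. As an illustration of the idea, the sketch below is a toy 2D stand-in that assumes a circular protrusion with SDF(x) = |x - c| - r, a quadratic penalty on negative SDF values, a discrete bending term built from second differences, and plain gradient descent with the root clamped; all names and stiffness values are hypothetical. Unlike projecting each penetrating vertex independently, the bending term couples neighboring vertices, so the penetrating run of vertices tends to be pushed out together.

```python
import numpy as np

# Hypothetical toy in 2D: a circular protrusion with SDF(x) = |x - c| - r,
# a quadratic penalty on negative SDF values, and a discrete bending term.

def penetration(x, center, radius):
    """Per-vertex depth inside the circle, i.e. max(0, -SDF(x))."""
    return np.maximum(0.0, radius - np.linalg.norm(x - center, axis=1))

def energy(x, center, radius, k_sdf, k_bend):
    s = x[:-2] - 2.0 * x[1:-1] + x[2:]                   # discrete second differences
    return k_sdf * np.sum(penetration(x, center, radius) ** 2) + k_bend * np.sum(s ** 2)

def gradient(x, center, radius, k_sdf, k_bend):
    g = np.zeros_like(x)
    diff = x - center
    dist = np.maximum(np.linalg.norm(diff, axis=1), 1e-12)  # guard against division by zero
    depth = np.maximum(0.0, radius - dist)
    # d/dx of k_sdf * (r - |x - c|)^2 is -2 k_sdf (r - |x - c|) (x - c) / |x - c|.
    g -= 2.0 * k_sdf * (depth / dist)[:, None] * diff
    # Bending term k_bend * |s_i|^2 touches vertices i-1, i, i+1 with weights 1, -2, 1.
    s = x[:-2] - 2.0 * x[1:-1] + x[2:]
    g[:-2] += 2.0 * k_bend * s
    g[1:-1] -= 4.0 * k_bend * s
    g[2:] += 2.0 * k_bend * s
    return g

def resolve(x, center, radius, k_sdf=100.0, k_bend=10.0, iters=2000):
    """Gradient descent on penalty + bending with the first two vertices clamped."""
    x = x.copy()
    # Step 1/L, where L = 2*k_sdf + 32*k_bend upper-bounds the energy's curvature.
    step = 1.0 / (2.0 * k_sdf + 32.0 * k_bend)
    for _ in range(iters):
        g = gradient(x, center, radius, k_sdf, k_bend)
        g[:2] = 0.0                                       # keep root position and direction fixed
        x -= step * g
    return x

n = 21
strand = np.stack([np.linspace(0.0, 10.0, n), np.zeros(n)], axis=1)
center, radius = np.array([5.0, 0.3]), 1.5
result = resolve(strand, center, radius)
print(energy(strand, center, radius, 100.0, 10.0), energy(result, center, radius, 100.0, 10.0))
```

Because the step size is 1/L for an upper bound L on the energy's curvature, no gradient step can increase the energy, so the penalty is traded off smoothly against bending rather than resolved vertex by vertex.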